Starting up a VM in GCP for LLMs

Creating an 80GB A100 instance on Google Cloud

Set up a billing account.

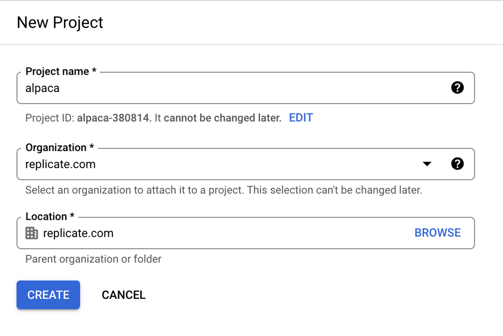

Create a new project called “alpaca” and copy the generated project ID. The commands in this post pass `alpaca` as the project; substitute your own project ID if it differs.

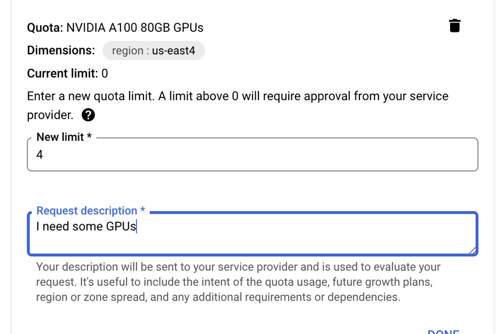

Go to https://console.cloud.google.com/iam-admin/quotas and request a quota increase for `compute.googleapis.com/nvidia_a100_80gb_gpus` in `us-east4`. Approval can take several hours, and sometimes a couple of days.
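
While you wait, it can help to check from the command line whether the increase has landed. The sketch below assumes the regional quota is reported under the metric name `NVIDIA_A100_80GB_GPUS` in the output of `gcloud compute regions describe`; the helper name `a100_quota` is made up for illustration.

```shell
# Assumption: the A100 80GB quota appears as NVIDIA_A100_80GB_GPUS
# in the region's quota list.
REGION="us-east4"
METRIC="NVIDIA_A100_80GB_GPUS"

# Hypothetical helper: print "metric,limit,usage" for the A100 80GB quota.
a100_quota() {
  gcloud compute regions describe "$REGION" \
    --flatten="quotas[]" \
    --format="csv[no-heading](quotas.metric,quotas.limit,quotas.usage)" \
    | grep "^${METRIC},"
}
```

Calling `a100_quota` prints one line of the form `metric,limit,usage`. A limit of at least 1 suggests the request has been approved.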

Run `gcloud auth login` to log in using your browser.

Run `gcloud init`. Select the project you created and the zone `us-east4-c`.
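
If you would rather skip the interactive prompts, the same defaults can be set directly with `gcloud config set`. This is a minimal sketch: `configure_gcloud` and its arguments are hypothetical names, and the project ID is whatever was generated for your project.

```shell
# Hypothetical helper: set the default project and zone non-interactively.
#   $1 = project ID (the generated ID, not necessarily the project name)
#   $2 = zone (defaults to the zone used in this post)
configure_gcloud() {
  local project_id="$1"
  local zone="${2:-us-east4-c}"
  gcloud config set project "$project_id"
  gcloud config set compute/zone "$zone"
}
```

For example, `configure_gcloud YOUR_PROJECT_ID` sets the project and leaves the zone at `us-east4-c`.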

Run this to create an instance:

    gcloud compute instances create alpaca \
      --project=alpaca \
      --zone=us-east4-c \
      --machine-type=a2-ultragpu-1g \
      --accelerator=type=nvidia-a100-80gb,count=1 \
      --boot-disk-size=1024GB \
      --image-project=deeplearning-platform-release \
      --image-family=common-cu113 \
      --maintenance-policy=TERMINATE \
      --restart-on-failure \
      --scopes=default,storage-rw \
      --metadata="install-nvidia-driver=True"
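
Rather than guessing how long to wait, you can poll the instance status. The sketch below is one way to do it; `wait_until_running`, the retry count, and the delay are illustrative choices. Note that `RUNNING` only means the VM has booted, and the NVIDIA driver install may still be in progress.

```shell
# Hypothetical helper: poll until the instance reports RUNNING.
#   $1 = instance name, $2 = zone
#   $3 = max attempts (default 30), $4 = seconds between attempts (default 10)
wait_until_running() {
  local instance="$1"
  local zone="$2"
  local attempts="${3:-30}"
  local delay="${4:-10}"
  local state=""
  local i=0
  while [ "$i" -lt "$attempts" ]; do
    state="$(gcloud compute instances describe "$instance" \
      --zone="$zone" --format='value(status)')"
    if [ "$state" = "RUNNING" ]; then
      echo "$instance is RUNNING"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "Timed out waiting for $instance (last status: $state)" >&2
  return 1
}
```

For the instance in this post, that would be `wait_until_running alpaca us-east4-c`.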

Wait a few minutes, and then log in to the new instance:

    gcloud compute ssh alpaca --project=alpaca --zone=us-east4-c
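
To confirm that the GPU is visible without opening an interactive session, you can run `nvidia-smi` over SSH. This sketch assumes the driver installation triggered by `install-nvidia-driver=True` has already finished; `check_gpu` is a hypothetical helper name.

```shell
# Hypothetical helper: print the GPU name and total memory seen by the driver.
#   $1 = instance name, $2 = zone
check_gpu() {
  gcloud compute ssh "$1" --zone="$2" \
    --command="nvidia-smi --query-gpu=name,memory.total --format=csv,noheader"
}
```

Running `check_gpu alpaca us-east4-c` should list one GPU. If the command reports that `nvidia-smi` is not found, the driver is probably still installing.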

Remember to switch it off when you’ve finished:

    gcloud compute instances stop alpaca
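
It is worth double-checking that the instance really has stopped. A small sketch, with `stop_and_confirm` as a made-up helper name:

```shell
# Hypothetical helper: stop the instance, then print its reported status.
#   $1 = instance name, $2 = zone
stop_and_confirm() {
  gcloud compute instances stop "$1" --zone="$2" || return 1
  gcloud compute instances describe "$1" --zone="$2" --format='value(status)'
}
```

After `stop_and_confirm alpaca us-east4-c`, a stopped instance reports `TERMINATED`. The 1 TB boot disk is still billed while the instance is stopped; `gcloud compute instances delete alpaca` removes the instance and, by default, its boot disk once you no longer need it.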